Evaluating #APConnect by Joss Kang

The Education and Training Foundation (ETF) has funded the Professional Development Programme for Advanced Practitioners (aka #APConnect) since 2018. This year SQW has been commissioned to evaluate #APConnect as part of the ETF’s quality assurance processes.

I’m always curious about the theory and methodology used to evidence the impact of national programmes. So much so that our team at touchconsulting Ltd built an exploration of the methodologies used by earlier evaluators into day three of the ETF’s popular ‘Developing APs’ part-residential CPD. After all, an integral part of the AP role is to think hard about how to evidence the difference that strategies, approaches and interventions are beginning to make. Ideally, the indicators are agreed at the beginning of the process, and in a way that is time- and resource-savvy. Time is so limited!

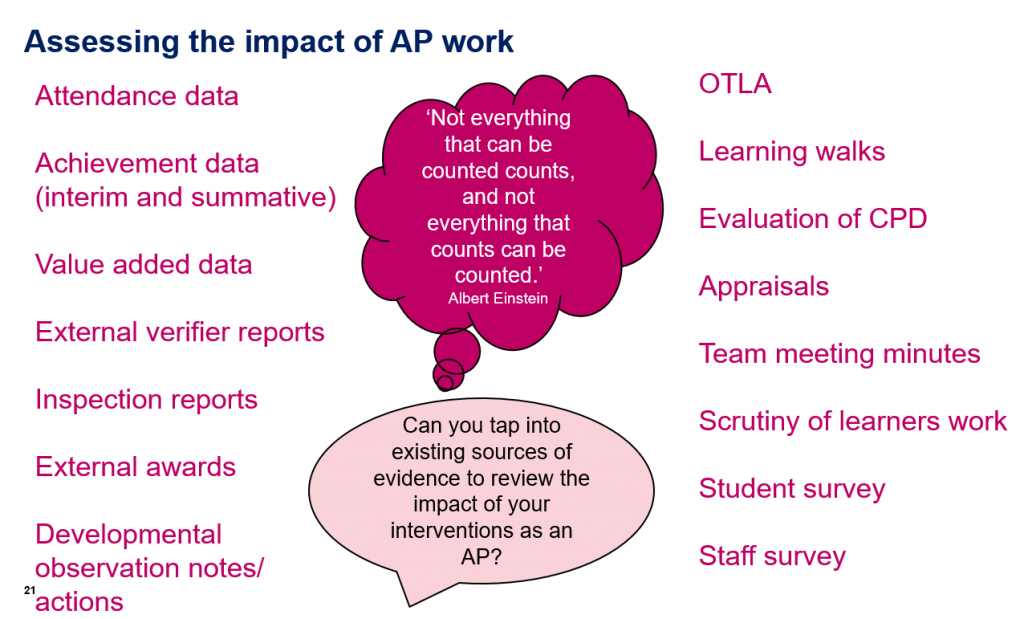

SQW will mine secondary data that already exists. Data that is generated as part of #APConnect’s day-to-day business such as participation data and participant surveys. APs can also mine such every-day data generated by their organisations as long as they can negotiate access and practice an ethics of care. Organisations, after all, have whole teams dedicated to generating such data: MIS, Quality, HR to name a few.

Slide from ‘Developing APs’ day 3 CPD

But APs have to ensure that the data they identify and harvest actually provides evidence for the intervention or initiative they are implementing. For example, if an AP or AP team is supporting new teachers with confidential 1:1 mentoring sessions because too many teachers have left over the last three years, then it may be useful to review teacher retention data for that department to see whether the sessions have made a difference. But again, time is needed for initiatives to embed and ‘impacts’ to surface.

Interestingly, when we asked APs on day three what data they thought ICF, the programme’s year one and two evaluators, would be examining to assess programme impact, top answers included inspection reports and learner success rates.

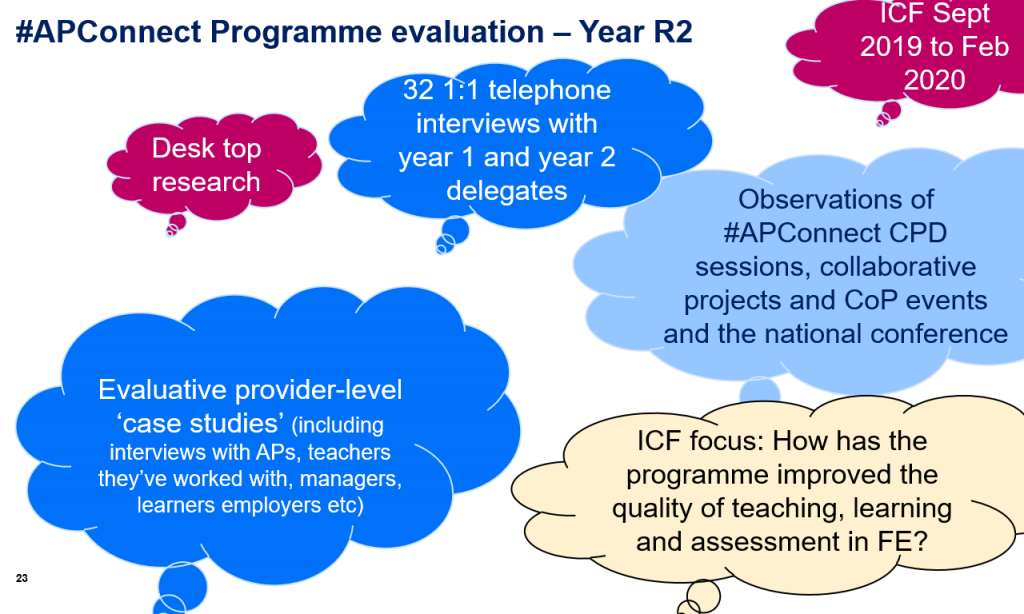

Nope: six months isn’t enough for that type of evidence to surface. Instead, the evaluators carried out a range of 1:1 and case study interviews with APs, with the staff APs were supporting and with the managers APs were working with, alongside direct observations, to gain a triangulated view.

As well as tapping into existing data, we encourage APs to explore more thoughtful and creative ways of evidencing impact (or, as I prefer to call it, difference), for example:

- Teachers meet in pairs during CPD time and reflect on what they have implemented. Comments are captured on a Google Form or SurveyMonkey questionnaire.

- A meeting or webinar to discuss and identify what teachers are doing differently since their development work, its impact on learners and how it can be developed.

- Video clip evidence of methods in action at different stages of the project/experimental practice, supported by peer review comments.

- Talking-head video clips of learners’ or teachers’ reflections.

- Written or sound file evidence from the AP/manager/learners about changes to the teacher’s practice and perceived effect.

- A reflective summary or blog by the teacher on how they have changed practice over time; peer reviewed or discussed with the AP.

- A survey or questionnaire, sent to teachers as an online link a few weeks afterwards, to identify what has been implemented and their reflections.

- Outcomes and marking records; sampling learners’ files to look for changes.

- Reflections on changes in data, e.g. attendance, punctuality and retention.

- Related comments in staff and student surveys or appraisal records.

- An audit or reflective review of Schemes of Work or Lesson Plans to see how methods/approaches are being embedded into planning and resources.

These richer ideas for assessing difference come from a training session run by Joanne Miles as part of #APConnect mentor training.

This year SQW will be carrying out 1:1 interviews with a random sample of participants on #APConnect pathways, and case study interviews with APs, teachers APs have worked with, senior leaders, learners and so on, to get a triangulated view of ‘impact’. If you are invited to a 1:1 or case study interview, please do take up the opportunity. It’s a great way of adding to your own repertoire for evaluating ‘difference’ and of influencing the direction of a national programme.

Thank you